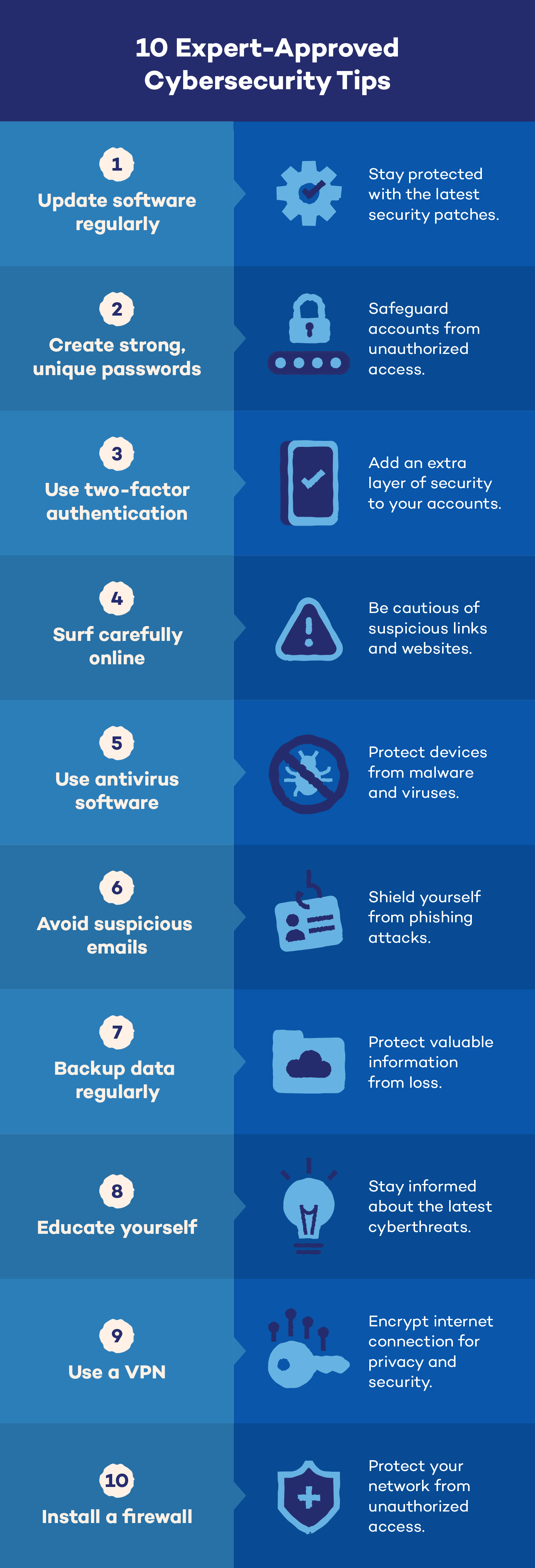

10 expert-approved cybersecurity measures:

- Update software regularly

- Create strong and unique passwords

- Use two-factor authentication

- Surf carefully online

- Use an antivirus software program

- Avoid suspicious emails

- Back up data regularly

- Educate yourself

- Use a VPN

- Install a firewall

Cybersecurity is not rocket science, but it can be intimidating for anyone who isn’t well-versed in the field. The good news? You don’t need a degree to protect yourself online, just a bit of awareness and caution. Many of the measures experts use to shield themselves from digital threats are surprisingly simple and practical. Even the U.S. Cybersecurity and Infrastructure Security Agency (CISA) recommends many of the same basic steps.

From creating strong passwords to recognizing phishing scams, these everyday habits can significantly boost your online security. In this post, we cover 10 cybersecurity tactics you can follow to avoid online scams.

What Are Cybersecurity Measures?

Cybersecurity measures are actions implemented to safeguard computers, networks and data against cyberattacks.

These can include using software like antivirus and firewalls, as well as following best practices like using strong passwords and being cautious of suspicious emails. In short, they’re the safeguards that keep your digital world secure against malicious actors.

10 Necessary Cybersecurity Measures

Safeguarding your online presence is as important as keeping yourself safe while offline. With cyberthreats constantly evolving, implementing robust cybersecurity measures is no longer an option but a necessity. The following 10 best practices in cybersecurity can help.

1. Update Software Regularly

Keep your operating system, programs and apps up to date on all your devices. Software makers regularly release updates that fix security holes, so install them as soon as possible. You can also turn on automatic updates for added convenience.

2. Create Strong and Unique Passwords

While passwordless sign-in options such as passkeys are becoming more common, passwords are still essential for many online accounts. For better protection, make sure each account has a unique, strong password.

Use a mix of uppercase and lowercase letters, numbers and symbols. Avoid using personal information or easily guessable words. Consider using a password manager, such as Panda Dome Passwords, to generate and store complex passwords securely.
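If you’re curious what “generating a complex password” looks like in practice, here is a minimal Python sketch. It is not how Panda Dome Passwords or any other password manager works internally; it simply uses Python’s standard `secrets` module, which is designed for security-sensitive randomness, and the hypothetical helper `generate_password` keeps drawing until the result contains an uppercase letter, a lowercase letter, a digit and a symbol.

```python
import secrets
import string

# Character pool: uppercase and lowercase letters, digits and symbols.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 16) -> str:
    """Return a random password containing at least one character from each class.

    Uses `secrets` (a cryptographically secure random source) rather than
    `random`, which is not meant for security purposes.
    """
    if length < 4:
        raise ValueError("length must be at least 4 to include every character class")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Draw again until every character class is represented.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


if __name__ == "__main__":
    print(generate_password(20))
```

Some sites reject certain symbols, so a real tool would let you adjust the alphabet; length matters more than any single character rule.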

3. Use Two-Factor Authentication

A secure password puts you on the right path, but it still isn’t enough. Activate two-factor authentication (2FA) whenever possible. This extra security layer requires a second proof of identity, typically a one-time code sent to your phone or generated by an authenticator app, so hackers who steal your password still have a much harder time accessing your accounts.
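Codes sent by text message are one form of 2FA; authenticator apps are another, and many of them use the time-based one-time password (TOTP) algorithm from RFC 6238. The Python sketch below, built around a hypothetical `totp` helper, illustrates the idea with the common defaults (HMAC-SHA1, 30-second steps, six digits): your phone and the service share a secret, and each side derives the same short-lived code from that secret and the current time. It is an illustration, not a drop-in implementation for any particular service.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret_b32: str, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Derive a time-based one-time password (RFC 6238, HMAC-SHA1 variant)."""
    # The shared secret is usually exchanged as Base32; restore any stripped padding.
    key = base64.b32decode(secret_b32.upper() + "=" * (-len(secret_b32) % 8))
    # Both sides count how many `step`-second intervals have passed since the Unix epoch.
    now = time.time() if for_time is None else for_time
    counter = int(now // step)
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): read 4 bytes at an offset taken from the last nibble.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret_b32: str, submitted: str, window: int = 1, step: int = 30) -> bool:
    """Accept codes from the current step and `window` steps either side (clock drift)."""
    now = time.time()
    return any(
        hmac.compare_digest(totp(secret_b32, now + i * step, step), submitted)
        for i in range(-window, window + 1)
    )


if __name__ == "__main__":
    secret = "GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ"  # example secret, never reuse a published one
    code = totp(secret)
    print(code, verify(secret, code))
```

As a sanity check, RFC 6238’s test vectors use the ASCII secret `12345678901234567890` (Base32 `GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ`), which at Unix time 59 yields the eight-digit code `94287082`.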

4. Surf Carefully Online

The internet is a vast ocean filled with treasures and dangers. While it offers endless possibilities, you should navigate carefully. Not every website is safe, and some hide malicious content.

Be cautious about where you click. If your browser flags a website as suspicious, heed the warning. Avoid sharing personal information on unverified sites. Also, check for the padlock icon (or an address starting with https://) before entering sensitive data, especially when making online transactions. Keep in mind that the padlock only means the connection is encrypted; it doesn’t prove the site itself is legitimate.

5. Use an Antivirus Software Program

Although some doubt its effectiveness, antivirus software combined with a firewall remains one of the strongest barriers against attacks. Surfing the web without up-to-date, reliable protection is an unnecessary risk that even the experts don’t want to take.

Choose a reputable antivirus program and keep it updated. Regularly scan your system for threats and follow the software’s recommendations.

6. Avoid Suspicious Emails

Phishing attacks often start with a deceptive email that can look legitimate, pretending to be from banks, online retailers or even friends. Such emails might include harmful links or attachments that can infect your device or access your personal information.

Be cautious of unexpected emails, especially those with urgent requests or suspicious attachments. Before clicking a link, hover over it to see the real destination URL. If an email looks suspicious, delete it without clicking any links or opening any attachments. Legitimate companies won’t ask you to send sensitive information, such as passwords, by email.
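Hovering works because a link’s visible text and its real destination are two separate things, and phishing emails exploit the gap. The hypothetical Python helper below is a simplified sketch of that check: when the visible text looks like a web address, it compares that host name with the host the link actually points to. It is deliberately strict (for example, `mybank.example` and `www.mybank.example` count as a mismatch), the domains are made up, and it is no substitute for a real email filter.

```python
from urllib.parse import urlparse


def link_looks_deceptive(display_text: str, href: str) -> bool:
    """Return True when a link's visible text names a different host than its real target."""
    # urlparse(...).hostname is already lowercased; it is None if no host is found.
    actual_host = urlparse(href).hostname or ""
    shown = display_text.strip()
    # Plain wording such as "Click here" can't be compared, so it isn't flagged here.
    if " " in shown or "." not in shown:
        return False
    # urlparse only finds a host name when a scheme is present, so add one if missing.
    if "://" not in shown:
        shown = "https://" + shown
    shown_host = urlparse(shown).hostname or ""
    return shown_host != actual_host


if __name__ == "__main__":
    # The text promises the bank, but the link leads to a look-alike domain.
    print(link_looks_deceptive("www.mybank.example",
                               "http://www.mybank.example.login-check.example/verify"))
```

Note that a link reading “Click here” passes this check, which is exactly why hovering to see the real address is still worth the habit.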

7. Back Up Data Regularly

Regular backups are your safety net against unforeseen disasters. Whether it’s a cyberattack, hardware failure or accidental deletion, having a recent backup can save you from heartbreak and frustration.

Create backups of your important files and documents on an external hard drive, cloud storage or other reliable storage media. Set up a regular backup schedule and frequently test your recovery process to ensure it functions as intended.

8. Educate Yourself

Stay informed about the latest cybersecurity threats, like phishing scams, data breaches and ransomware. Understanding how these attacks work can help you spot red flags and protect yourself.

Follow reputable cybersecurity news sources, attend online webinars and participate in cybersecurity forums. Share what you learn with others to help build a more secure digital community.

9. Use a VPN

A VPN creates an encrypted tunnel for your online traffic, making it unreadable to snoopers such as others on the same public Wi-Fi network. It also hides your IP address from the websites you visit and lets you bypass geo-restrictions to access content from different regions.

Try Panda Dome VPN to add an extra layer of protection to your online activities, especially when using public Wi-Fi networks.

10. Install a Firewall

A firewall is your network’s bouncer, guarding against unwanted intruders. It acts as a security barrier, monitoring and controlling incoming and outgoing network traffic. By carefully examining data packets, it blocks suspicious activity and prevents unauthorized access to your system.

It allows only authorized traffic to pass through, helping protect you against threats such as malware and hacking attempts. Most operating systems include a built-in firewall, but you can consider a third-party firewall for enhanced protection.
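To make “allows only authorized traffic” concrete, here is a toy rule checker in Python. It illustrates two ideas most firewalls share: rules are checked in order and the first match wins, and anything no rule allows is blocked by default. Everything here (the `Rule` and `Packet` types, the example rules and addresses) is hypothetical, and real firewalls do much more, such as tracking connection state so replies to your own outbound requests get through, and handling IPv6, which this IPv4-only sketch ignores.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    action: str                # "allow" or "deny"
    direction: str             # "in" or "out"
    port: Optional[int]        # None matches any port
    source: str = "0.0.0.0/0"  # IPv4 range the remote address must fall in


@dataclass(frozen=True)
class Packet:
    direction: str
    remote_ip: str
    port: int


def decide(packet: Packet, rules: List[Rule], default: str = "deny") -> str:
    """First matching rule wins; anything unmatched falls through to the default policy."""
    for rule in rules:
        if rule.direction != packet.direction:
            continue
        if rule.port is not None and rule.port != packet.port:
            continue
        if ip_address(packet.remote_ip) not in ip_network(rule.source):
            continue
        return rule.action
    return default


RULES = [
    Rule("allow", "out", None),                        # connections this machine starts
    Rule("allow", "in", 443),                          # a web server reachable from anywhere
    Rule("allow", "in", 22, source="192.168.1.0/24"),  # remote login only from the home network
]

if __name__ == "__main__":
    for p in [Packet("in", "192.168.1.20", 22), Packet("in", "203.0.113.7", 22)]:
        print(p, decide(p, RULES))
```

The default-deny choice is the important one: a port you forgot about stays closed instead of open.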

How Do Cybersecurity Breaches Happen?

Cybersecurity breaches are unfortunately becoming increasingly common. The consequences of these incidents can be disastrous for both individuals and organizations. Here are some of the most common ways these breaches occur:

- Phishing attacks: Phishing is a scam where attackers impersonate a credible entity to acquire confidential information. Cybercriminals often send emails or messages that mimic legitimate sources to trick victims into revealing passwords, credit card numbers or other personal data.

- Malware infections: Malware encompasses various types of harmful programs that can infiltrate computer systems. These can range from viruses and worms to ransomware and spyware. Infected emails, dubious websites and harmful downloads are the most common sources of malware.

- Weak passwords: A surprisingly high number of data breaches are attributed to weak or easily guessed passwords. Using simple passwords or reusing them across multiple accounts significantly increases the risk of unauthorized access.

- Unpatched software: Cybercriminals often exploit software vulnerabilities to gain entry into systems. Keeping software up-to-date with the latest patches is crucial for addressing these weaknesses and preventing attacks.

- Insider threats: In some cases, cybersecurity breaches originate from within an organization. Employees acting with malicious intent, or simply making mistakes, can compromise sensitive data. Insider threats pose considerable risks because insiders already have legitimate access privileges.

Given the ever-changing digital environment and the frequent emergence of new threats, staying up-to-date on the latest cybersecurity trends and best practices is key.

By combining vigilance, knowledge and the right tools like antivirus software — along with the cybersecurity tips we discussed — you can build a strong defense against cyberattacks and enjoy a safer online experience.

Cybersecurity Measures FAQ

Cybersecurity is a crucial aspect of protecting yourself from digital threats. With the increasing complexity of cyberattacks, understanding essential cybersecurity measures is vital. Here are answers to some common questions.

What Are the 5 Cybersecurity Control Measures?

Cybersecurity controls protect systems, networks and data. They are commonly grouped into five main types:

- Preventive controls stop attacks before they happen (e.g., strong passwords, firewalls).

- Detective controls identify attacks while they are happening or after they occur (e.g., intrusion detection, log monitoring).

- Corrective controls fix problems after an attack (e.g., data backups, cyber incident response).

- Deterrent controls discourage attacks (e.g., security cameras, legal policies).

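- Compensating controls are alternative safeguards used when a primary control can’t be implemented or fails (e.g., extra monitoring for a legacy system that can’t be patched).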

What Are Some Examples of Network Security?

Key examples of network security include firewalls, intrusion detection and prevention systems (IDPS), VPNs, access controls and network segmentation. They protect your computer networks from unauthorized access, use, modification or disruption.

Do You Need Cybersecurity Measures for Your Organization?

Absolutely! Regardless of your organization’s size or industry, cybersecurity is essential. Even small businesses and individuals can be targets of cyberattacks, and the consequences of a breach can be severe, including financial loss, reputational damage and legal liabilities.